This guide from Hany Farid gives you a mathematical view of the common operations used in image processing.

It's a good introduction.

Learn about the fundamentals of signal and image processing built upon a unifying linear algebraic framework.

http://www.cs.dartmouth.edu/farid/tutorials/fip.pdf

Other short guides are available here: http://www.cs.dartmouth.edu/farid/tutorials/
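To give a taste of what a "linear algebraic framework" means here, the sketch below writes a small circular convolution as a matrix-vector product. It is only an illustration of the general idea, not code taken from the guide; the function names `convolution_matrix`, `matvec` and `circular_convolve` are hypothetical.

```python
def convolution_matrix(kernel, n):
    """Build the n x n matrix M such that M applied to x equals the
    circular convolution of x with kernel."""
    matrix = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for k, h in enumerate(kernel):
            # Output sample i receives h * x[i - k], wrapping around the ends.
            matrix[i][(i - k) % n] += h
    return matrix


def matvec(matrix, x):
    """Plain matrix-vector product."""
    return [sum(m * v for m, v in zip(row, x)) for row in matrix]


def circular_convolve(x, kernel):
    """Direct circular convolution, used as a reference."""
    n = len(x)
    return [sum(h * x[(i - k) % n] for k, h in enumerate(kernel))
            for i in range(n)]


signal = [1.0, 2.0, 3.0, 4.0]
box = [0.5, 0.5]  # two-tap averaging filter
print(matvec(convolution_matrix(box, len(signal)), signal))
print(circular_convolve(signal, box))
```

Both calls print the same list, which is the point: filtering is a linear operator, so it can be studied with the usual matrix tools.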

A bunch of news about Computer vision, Computer Graphics, GPGPU or the mix of the three....

Thursday, December 30, 2010

Tuesday, December 28, 2010

Sixth Sense interface

I really like the concept shown at the 3-minute mark: using a white page and a logo as a tracking pattern.

It's like reverse motion capture (a computer vision gyroscope!).

SixthSense from Fluid Interfaces on Vimeo.

Found here http://vimeo.com/17567999

"'SixthSense' is a wearable gestural interface that augments the physical world around us with digital information and lets us use natural hand gestures to interact with that information. By using a camera and a tiny projector mounted in a pendant like wearable device, 'SixthSense' sees what you see and visually augments any surfaces or objects we are interacting with. It projects information onto surfaces, walls, and physical objects around us, and lets us interact with the projected information through natural hand gestures, arm movements, or our interaction with the object itself. 'SixthSense' attempts to free information from its confines by seamlessly integrating it with reality, and thus making the entire world your computer."

Friday, December 17, 2010

Bing Panorama generator

The Microsoft Bing application lets mobile phones generate panoramas with a live spherical preview!

Thursday, December 16, 2010

Kinect body gesture recognition

As everyone knows, Microsoft does not provide its body movement recognition technology.

But open SDKs already exist and can be used!

I suggest everyone take a look at OpenNI skeleton detection.

And a demo of the skeleton tracking output !

And Kinect with skeletal tracking from OpenNI

Wednesday, December 15, 2010

Visual SLAM with Kinect

This video shows visual SLAM with the Kinect camera. It is a kind of pose registration that completes the scene with each new 3D point cloud.

Tuesday, December 14, 2010

3D Reconstruction with Kinect

This video shows the first results for 3D object reconstruction using the depth images from the Microsoft Kinect camera.

Data acquisition is as simple as moving the Kinect around the object of interest.

From there the raw data is processed in a two step algorithm:

1. Superresolution images are computed from several consecutive captured frames.

2. The superresolution images are aligned using an improved version of the global alignment technique from "3D Shape Scanning with a Time-of-Flight Camera - Yan Cui, et al. CVPR2010".

Laboratory : Lab

Wednesday, December 8, 2010

gDebugger is now FREE

gDEBugger GL is an advanced OpenGL Debugger, Profiler and Graphic Memory Analyzer, which traces application activity on top of the OpenGL API to provide the information you need to find bugs and to optimize OpenGL applications performance. And it is now available for free !

Download

Free licence here

Source : ozone3D

Tuesday, December 7, 2010

Virtual Plastic Surgery

Using an innovative VECTRA® 3-Dimensional camera and Sculptor™ software, developed by Canfield Imaging Systems (Fairfield, NJ) Dr. Simoni can provide plastic surgery patients with life-like simulations of their faces in 3 dimensional spaces.

Photogrammetry techniques, for better or for worse...

Source : here

Similar software could one day be created for breasts; recall this simulation software: http://smallideasforabigworld.blogspot.com/2009/11/breast-implant-simulator.html.

Labels: Computer Graphics, computer vision, Tech demo

Kinect on a quadrotor (UAV)

This video shows a Kinect used as a radar-like sensor on an autonomous quadrotor UAV.

More info here :

Hybrid Systems Laboratory http://hybrid.eecs.berkeley.edu/

This work is part of the STARMAC Project in the Hybrid Systems Lab at UC Berkeley (EECS department). http://hybrid.eecs.berkeley.edu/

Researcher: Patrick Bouffard

PI: Prof. Claire Tomlin

Our lab's Ascending Technologies [1] Pelican quadrotor, flying autonomously and avoiding obstacles.

The attached Microsoft Kinect [2] delivers a point cloud to the onboard computer via the ROS [3] kinect driver, which uses the OpenKinect/Freenect [4] project's driver for hardware access. A sample consensus algorithm [5] fits a planar model to the points on the floor, and this planar model is fed into the controller as the sensed altitude. All processing is done on the on-board 1.6 GHz Intel Atom based computer, running Linux (Ubuntu 10.04).

A VICON [6] motion capture system is used to provide the other necessary degrees of freedom (lateral and yaw) and acts as a safety backup to the Kinect altitude--in case of a dropout in the altitude reading from the Kinect data, the VICON based reading is used instead. In this video however, the safety backup was not needed.

[1] http://www.asctec.de

[2] http://www.microsoft.com

[3] http://www.ros.org/wiki/kinect

[4] http://openkinect.org

[5] http://www.ros.org/wiki/pcl

[6] http://www.vicon.com
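The altitude estimate described above (a sample consensus fit of a plane to the floor points) can be sketched as follows. This is a minimal pure-Python illustration of RANSAC plane fitting, not the PCL implementation used by the lab. The function names, the tolerance, the iteration count and the convention that the sensor sits at the origin are all assumptions made for the example.

```python
import random


def plane_from_points(p, q, r):
    """Return (a, b, c, d) with unit normal (a, b, c) such that
    a*x + b*y + c*z + d = 0, or None if the points are collinear."""
    ux, uy, uz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    vx, vy, vz = r[0] - p[0], r[1] - p[1], r[2] - p[2]
    a = uy * vz - uz * vy
    b = uz * vx - ux * vz
    c = ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-12:
        return None  # degenerate sample
    a, b, c = a / norm, b / norm, c / norm
    return a, b, c, -(a * p[0] + b * p[1] + c * p[2])


def ransac_plane(points, iterations=200, tolerance=0.02, seed=0):
    """Keep the plane, built from 3 random points, that has the most
    points within `tolerance` of it."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, -1
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = sum(1 for x, y, z in points
                      if abs(a * x + b * y + c * z + d) <= tolerance)
        if inliers > best_inliers:
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


def altitude(plane):
    """Distance from the sensor origin to the plane (the normal is unit)."""
    return abs(plane[3])
```

With a point cloud expressed in the sensor frame, `altitude(ransac_plane(cloud)[0])` gives the sensed height above the dominant plane, as long as the floor is the largest planar structure in view.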

Thursday, December 2, 2010

Omni stereo camera

ViewPlus, a Japanese company, offers fun omni-stereo cameras:

I'll let you admire the workmanship and the camera placement, and the three models: Jupiter, Saturn and Venus.

| This camera has 60 eyes! |

They are built on a basic component like this one:

If you want to know more : ViewPlus

Labels: computer vision, Image processing, Tech demo

Multitouch on a curved surface

The German university of Aachen is currently working on an original multitouch interface. They propose a curved multitouch screen, built using IR LEDs and a video projector.

Such a project is interesting, as more and more people are used to working with multi-screen displays.

I really like the idea that the most curved band is used as a thumbnail view for photo sorting.

Labels: Human Machine Interface, Multitouch, Tech demo

Wednesday, December 1, 2010

EC2 Instance Type - The Cluster GPU Instance

Amazon now offers GPU clusters...

http://aws.typepad.com/aws/2010/11/new-ec2-instance-type-the-cluster-gpu-instance.html

Each cluster instance has the following configuration:

- A pair of NVIDIA Tesla M2050 "Fermi" GPUs.

- A pair of quad-core Intel "Nehalem" X5570 processors offering 33.5 ECUs (EC2 Compute Units).

- 22 GB of RAM.

- 1690 GB of local instance storage.

- 10 Gbps Ethernet, with the ability to create low latency, full bisection bandwidth HPC clusters.

It's cool to see good GPUs included in clusters. But must a computation really be launched on the cloud, or could the cloud be replaced by a single machine with a network interface?

Monday, November 29, 2010

IScan3D

This iPhone application sends photos to a server that computes a dense 3D point cloud, then triangulates and textures it. Of course, they say nothing about the precision of the output or whether a metric reconstruction is possible, and nothing about the time needed to upload the content to the server (the images can be huge)!

It's highly probable that they use already known tools such as Bundler/PMVS2 plus in-house algorithms.

You could achieve "the same" by running a toolkit like the following on a server:

Images to point cloud (MultiPlatform):

http://opensourcephotogrammetry.blogspot.com/

Labels: Computer Graphics, computer vision, Tech demo

What Android Is ?

Want information about the Android SDK?

Take a look at the following link (its slides present the main components):

Found here :

http://www.tbray.org/ongoing/When/201x/2010/11/14/What-Android-Is

Thursday, November 25, 2010

Kinect: how it estimates depth with IR projection

Wanting to understand how the Kinect senses depth, I found this video that shows the IR projection.

You can see a series of dots! The dots are projected at different sizes. Knowing the projected IR pattern, the system has to solve for the deformation that the IR camera sees (a kind of structured-light system).

Source : KinectHacks
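As a rough illustration of the structured-light idea, the sketch below finds how far a known dot pattern has shifted along one scanline and converts that shift into a depth with the classic triangulation formula z = f * b / d. It assumes the projector and camera behave like a rectified stereo pair. The function names and the focal length and baseline values are made up for the example; they are not the Kinect's actual parameters or algorithm.

```python
def find_shift(reference, observed, max_shift):
    """Return the shift s in [0, max_shift] that best aligns the observed
    scanline with the known reference pattern (mean absolute difference)."""
    best_shift, best_cost = 0, float("inf")
    n = len(reference)
    for s in range(max_shift + 1):
        overlap = n - s
        cost = sum(abs(observed[i + s] - reference[i])
                   for i in range(overlap)) / overlap
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulation for a rectified projector/camera pair: z = f * b / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Known projected pattern (1 = dot) and the same pattern seen 3 pixels further.
pattern = [0, 1, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0]
seen = [0, 0, 0] + pattern[:-3]
shift = find_shift(pattern, seen, max_shift=5)
print(shift, depth_from_disparity(shift, focal_px=580.0, baseline_m=0.075))
```

A real system matches small 2D windows of the dot pattern rather than a whole scanline, which is why the pattern needs to be locally unique.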

Friday, November 19, 2010

London 80-gigapixel panorama

The old record of 70 gigapixels (Budapest) has now been beaten:

London : This is an 80-gigapixel panoramic photo, made from 7886 individual images. This panorama was shot from the top of the Centre Point building in central London, in the summer of 2010. We hope that the varied sights and energy of London have been captured here in a way never done before, so that you can experience one of the world's great cities - wherever you may be right now.

For the panorama :

http://www.360cities.net/london-photo-en.html

For the hardware they used :

We would like to thank Fujitsu Technology Solutions for providing the great hardware we used to help to make this image. This panoramic photo was stitched on a Fujitsu CELSIUS workstation comprising dual 6-core CPUs, 192GB of RAM, and a 4GB graphics card. Using this excellent workstation allowed this record-breaking photo to be created a few weeks faster than would have been possible on any other available PC. You can read more about our great experience using the CELSIUS workstation on Fujitsu's blog.

Wednesday, November 17, 2010

Intel OpenCL SDK

Intel® OpenCL* SDK is an Alpha software release. It is an implementation of the OpenCL* 1.1 standard optimized for Intel® Core™ processors, running on Microsoft* Windows* 7 and Windows Vista* operating systems.

This Alpha software brings OpenCL* for the CPU in support of OpenCL developers desiring CPU advantages found on many OpenCL* workloads. OpenCL* language and Application Programming Interface (API) enables you to seamlessly take advantage of the Intel® Core™ processor benefits such as Intel® Streaming SIMD Extensions (Intel® SSE) utilization and Multi-Core scalability.

The SDK comes with four samples including two graphics related demos: GodRays and MedianFilter

Source : Intel, Geeks3D

Kinect Hacking

Thanks to OpenKinect, some people have started to play with the Microsoft hardware!

ofxKinect 3D draw 001 from Memo Akten on Vimeo.

3d video with Xbox Kinect from cc laan on Vimeo.

http://idav.ucdavis.edu/~okreylos/ResDev/Kinect/index.html

Ads Wall Projection

In celebration of 10 years of digital innovation, RalphLauren.com presents the ultimate fusion of art, fashion & technology in a visual feast for the 5 senses. Watch as the London flagship at 1 New Bond Street disappears before your eyes and is then transformed into a series of objects and images rendered in 3-dimensional space.

And the making of !

The Official Ralph Lauren 4D Experience – Behind The Scenes from Ralph Lauren on Vimeo.

Saturday, November 13, 2010

Kinect camera Hack !

The Kinect camera is out, and some people are already hacking the depth map images to make tech demos:

XBox Kinect running on OS X ( with source code ) from Theo Watson on Vimeo.

Friday, November 5, 2010

Kinect camera

Here, at 1:04, you can see the Kinect camera hardware (two cameras: depth and color).

Note: it's fun to see that a heat dissipation system is present (a fan)!

Source : Here

| The motorized base can rotate the top sensor bar to track you as you dance around the room |

Labels: Augmented reality, Computer Graphics, computer vision, Tech demo

Monday, October 25, 2010

ATI display tech overview

An interesting paper that shows the advantages of DisplayPort for multi-screen setups.

MST is particularly interesting !

http://www.amd.com/us/Documents/AMD_Radeon_Display_Technologies_WP.pdf

Friday, October 22, 2010

Monday, September 13, 2010

Quicksee acquired by Google

"Google is buying Israeli startup Quicksee, also known as MentorWave Technologies, for an estimated $10 million, reports Israeli newspaper Haaretz. The startup has raised $3 million from Ofer Hi-Tech and Doctor International Management."

"Quicksee makes 3D video tour software that can be pinned on a Google Map. It results in an effect similar to what you can see in Street View on Google Maps, allowing the viewer to pan around and see a panoramic image of a location. Except that the panoramic images do not require expensive 3D cameras mounted on top of a Google vehicle. Anybody can create one with a regular video camera and Quicksee software.

Google has not confirmed the acquisition or what it plans on doing with the team and technology, so this is just speculation. But the technology could allow Google Maps to accept geo-tagged, 3D panoramas uploaded by consumers. If you think Street View, which is shot by Google employees, creates new issues of privacy on the Internet, wait until consumers start uploading their own street views or who knows what else. Although 3D views inside buildings could be a nifty new feature for Google Places allows businesses to self-upload."

Source : TechCrunch QuickSee

Labels: Computer Graphics, computer vision, Tech demo

Friday, September 10, 2010

Real-time O(1) Bilateral Filtering

This paper comes from CVPR 2009.

Qingxiong Yang, Kar-Han Tan and Narendra Ahuja, Real-time O(1) Bilateral Filtering, IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2009.

We propose a new bilateral filtering algorithm with computational complexity invariant to filter kernel size, so-called O(1) or constant time in the literature. By showing that a bilateral filter can be decomposed into a number of constant time spatial filters, our method yields a new class of constant time bilateral filters that can have arbitrary spatial and arbitrary range kernels. In contrast, the current available constant time algorithm requires the use of specific spatial or specific range kernels. Also, our algorithm lends itself to a parallel implementation leading to the first real-time O(1) algorithm that we know of. Meanwhile, our algorithm yields higher quality results since we are effectively quantizing the range function instead of quantizing both the range function and the input image. Empirical experiments show that our algorithm not only gives higher PSNR, but is about 10× faster than the state-of-the-art. It also has a small memory footprint, needed only 2% of the memory required by the state-of-the-art for obtaining the same quality as exact using 8-bit images. We also show that our algorithm can be easily extended for O(1) median filtering. Our bilateral filtering algorithm was tested in a number of applications, including HD video conferencing, video abstraction, highlight removal, and multi-focus imaging.
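For readers who have never written a bilateral filter, here is a brute-force 1D version with Gaussian spatial and range kernels. It is deliberately the naive formulation, whose cost per sample grows with the kernel radius; that growth is exactly what the paper's O(1) decomposition avoids. It is not the paper's algorithm, and the function name and parameters are chosen only for this illustration.

```python
import math


def bilateral_filter_1d(signal, radius, sigma_space, sigma_range):
    """Naive bilateral filter: each output sample is a weighted mean of its
    neighbours, weighted by spatial distance AND by intensity difference."""
    n = len(signal)
    out = []
    for i in range(n):
        weighted_sum = 0.0
        weight_total = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_space = math.exp(-((i - j) ** 2) / (2.0 * sigma_space ** 2))
            w_range = math.exp(-((signal[i] - signal[j]) ** 2)
                               / (2.0 * sigma_range ** 2))
            weight = w_space * w_range
            weighted_sum += weight * signal[j]
            weight_total += weight
        # weight_total >= 1 because the centre sample always has weight 1.
        out.append(weighted_sum / weight_total)
    return out


# A step edge: a plain Gaussian blur would smear it, the bilateral filter
# keeps it because samples across the edge get a negligible range weight.
step = [0.0] * 5 + [100.0] * 5
print(bilateral_filter_1d(step, radius=3, sigma_space=2.0, sigma_range=5.0))
```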

Labels: computer vision, Image processing, Tech demo

A multiplatform alternative to GLUT

PEZ is a Teeny Tiny GLUT Alternative

Pez enables cross-platform development of extremely simple OpenGL apps. It’s not really a library since it’s so small. Instead of linking it in, just drop one or two header files into your project.

The tutorial is composed of the following sections:

Tutorial 1: Simplest Possible Example

Tutorial 2: Hello Triangle

Tutorial 3: Ghandi Texture

Tutorial 4: Mesh Viewer

The drawbacks I see for the moment are that Pez doesn't handle keyboard input or resizable windows, but it provides a sufficient environment for demos and tutorials!

Thursday, September 9, 2010

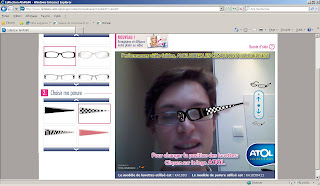

Try glasses with Augmented Reality

"Atol unveiled a new web-based experience offering people to try latest glasses collection “AK Adriana Karembeu”. This augmented reality experience uses Total Immersion D’Fusion@Home and face tracking technology."

The only major problem with such things is that people who need glasses to see cannot see their own head clearly (so it's mostly a fashion application). An augmented reality that could erase your old glasses and replace them with a new model would be really cool and useful.

The demo works fine, but the software can lose the head tracking, accumulate error, and then fail to place the glasses correctly on your head. The rendering looks a little plastic, but the software cannot estimate an environment map using only the face...

| Here we can see that the tracking is lost! |

Source : here and here

Labels: Computer Graphics, computer vision, Image processing, Tech demo

Wednesday, September 8, 2010

Crytek adds Stereoscopic 3D support to CryENGINE 3

Crytek announced that CryENGINE 3 supports stereoscopic displays with a very small overhead through the CryENGINE 3 Screen Space Re-Projection Stereo (SSRS) solution.

Source : CryEngine press release

CMake tips

CMake is a cool cross-platform tool for build automation. But sometimes you want finer control from the command line. For example, on a 64-bit Linux machine you will compile 64-bit binaries by default. So if you want to compile 32-bit binaries on a 64-bit machine, you have to pass some parameters to configure CFLAGS and CXXFLAGS:

How to control compiler flags :

If you want to specify your own compiler flags, you can

Set the environment variables CFLAGS and CXXFLAGS

or

cmake . -DCMAKE_C_FLAGS=

When providing your own compiler flags, you might want to specify CMAKE_BUILD_TYPE as well.

For example, if you want to do a release build for 32 bit on a 64-bit Linux machine, you do cmake . -DCMAKE_C_FLAGS=-m32 -DCMAKE_CXX_FLAGS=-m32

Tuesday, September 7, 2010

Geometric Computer Vision courses

Here is a course on geometric computer vision from Dr. Marc Pollefeys.

Course Objectives

After attending this course students should:

Understand the concepts that allow recovering 3D shape from images.

Have a good overview of the state of the art in geometric computer vision.

Be able to critically analyze and assess current research in the area.

Implement components of a 3D photography system.

Course Topics

The course will cover, among others, the following topics: camera model and calibration, single-view metrology, triangulation, epipolar and multi-view geometry, two-view and multi-view stereo, structured-light, feature tracking and matching, structure-from-motion, shape-from-silhouettes and 3D modeling and applications.

Target Audience

The target audience of this course are Master or PhD students, or advanced Bachelor students, that are interested in learning about geometric computer vision and related topics.

Labels: Computer Graphics, computer vision, Image processing, Photography

Panorama Tracking and Mapping on Mobile Phones

For the tech side :

http://studierstube.icg.tu-graz.ac.at/handheld_ar/naturalfeature.php

Related papers:

Real-time Panoramic Mapping and Tracking on Mobile Phones

Online Creation of Panoramic Augmented Reality Annotations on Mobile Phones

Authors: Tobias Langlotz, Daniel Wagner, Alessandro Mulloni, Dieter Schmalstieg

Details: Accepted for IEEE Pervasive Computing

We present a novel approach for creating and exploring annotations in place using mobile phones. The system can be used in large-scale indoor and outdoor scenarios and offers an accurate mapping of the annotations to physical objects. The system uses a drift-free orientation tracking based on panoramic images, which can be initialized using data from a GPS sensor. Given the current position and view direction, we show how annotations can be accurately mapped to the correct objects, even in the case of varying user positions. Possible applications range from Augmented Reality browsers to pedestrian navigation.

Labels: Computer Graphics, computer vision, Image processing, Panoramic images, Tech demo

Friday, September 3, 2010

Unreal Engine 3 on Ipad, Itouch, I

Epic Citadel is an application that showcases the technical capabilities of the Unreal Engine 3 on iOS devices like iPad and iPhone.

It looks good !

| http://epicgames.com/technology/epic-citadel |

Thursday, September 2, 2010

K-means: a tutorial

Here you will find a short tutorial on how the K-means algorithm works.

K-Means is a clustering algorithm. That means you can “group” points based on their neighbourhood. When a lot of points are nearby, you mark them as one cluster. With K-means, you can find good center points for these clusters.
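The description above can be sketched in a few lines. This is a plain Lloyd-style K-means on 2D points, written for illustration and not taken from the linked tutorial; the function name, the random initialisation from the input points and the iteration cap are assumptions.

```python
import random


def kmeans(points, k, iterations=20, seed=0):
    """Alternate between assigning each point to its nearest centre and
    moving each centre to the mean of its assigned points."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k of the input points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k),
                          key=lambda c: (p[0] - centers[c][0]) ** 2
                                        + (p[1] - centers[c][1]) ** 2)
            clusters[nearest].append(p)
        new_centers = []
        for c, members in enumerate(clusters):
            if members:
                new_centers.append((sum(x for x, _ in members) / len(members),
                                    sum(y for _, y in members) / len(members)))
            else:
                new_centers.append(centers[c])  # keep an empty cluster's centre
        if new_centers == centers:
            break  # assignments are stable
        centers = new_centers
    return centers, clusters


blob_a = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
blob_b = [(10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
centers, clusters = kmeans(blob_a + blob_b, k=2)
print(sorted(centers))
```

On two well-separated groups like these, the centres settle on the middle of each group after a couple of iterations. On harder data the result depends on the initial centres, which is why K-means is usually run several times.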

Labels: Computer Graphics, computer vision, Image processing, Tech demo

SIFT an illustrated tutorial

On the web we find a bunch of things... and sometimes interesting ones.

Here is a step-by-step study of the SIFT process. (I don't agree with every illustration, but the idea is there and can help people understand the meaning of each step.)

1. Constructing a scale space

2. LoG Approximation

3. Finding keypoints

4. Get rid of bad key points

5. Assigning an orientation to the keypoints

6. Generate SIFT features

| http://www.aishack.in/2010/05/sift-scale-invariant-feature-transform/ |
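Steps 1 to 3 above can be sketched on a 1D signal: blur at increasing scales, subtract neighbouring scales to get a Difference of Gaussians (the usual approximation of the LoG), then look for samples that are strict extrema among their neighbours in position and scale. This is a simplified illustration under my own assumptions (1D instead of 2D, no octaves or downsampling, clamped borders, hypothetical function names); it is not the tutorial's or Lowe's implementation.

```python
import math


def gaussian_kernel(sigma):
    """Normalised Gaussian weights covering roughly +/- 3 sigma."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(signal, sigma):
    """Gaussian blur with the border samples repeated (clamping)."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


def difference_of_gaussians(signal, sigmas):
    """One DoG level per pair of consecutive scales."""
    blurred = [blur(signal, s) for s in sigmas]
    return [[hi - lo for lo, hi in zip(fine, coarse)]
            for fine, coarse in zip(blurred, blurred[1:])]


def local_extrema(dog):
    """(scale_index, position) pairs that are strictly larger or smaller
    than their 8 neighbours in position and scale."""
    found = []
    for s in range(1, len(dog) - 1):
        for i in range(1, len(dog[s]) - 1):
            value = dog[s][i]
            neighbours = [dog[t][j]
                          for t in (s - 1, s, s + 1)
                          for j in (i - 1, i, i + 1)
                          if (t, j) != (s, i)]
            if value > max(neighbours) or value < min(neighbours):
                found.append((s, i))
    return found


signal = [0.0] * 17 + [1.0] * 7 + [0.0] * 17
dog = difference_of_gaussians(signal, [1.0, 1.6, 2.56, 4.1])
print(local_extrema(dog))
```

In the full algorithm the candidates found this way are then filtered (step 4: low contrast and edge responses are rejected) before orientations and descriptors are computed.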

Labels: computer vision, Image processing, Tech demo

Wednesday, September 1, 2010

Popcode AR for mobile phone

"Popcode is what is known in the trade as a Markerless Augmented Reality platform. That means you can add additional content onto any image (providing it has enough texture). You do not need to print large black-and-white markers to be able to add Augmented Reality (AR) content to the world using Popcode." The product was developed by Extra Reality Ltd.

For the tech side :

The founders are Simon Taylor, Dr. Tom Drummond (Simon's thesis supervisor) and Connell Gauld (mobile platform coding expert).

=> They use the FAST corner detector and HIPS matching.

I'll let you have a look at the SDK!

And the demos :

Tuesday, August 31, 2010

I have tested Kinect !

Kinect from Microsoft works well. Sometimes it lags, but it works quite well.

You recognize your avatar's posture immediately, which is natural: it's you!

When you play you move a lot, and you have a lot of fun.

I got a good score in the three mini-games I tested, so I earned the right to record my own dance and watch some avatars perform the same moves as me to the music!

Wii tested, Kinect tested... only the PlayStation Move remains...

CUDA-Surf

In computer vision we always want something fast... This implementation of SURF relies on CUDA and so runs on the GPU.

We do not have any information about the timings...

http://www.mis.tu-darmstadt.de/surf

Labels: computer vision, GPGPU, Image processing, Tech demo

Monday, August 30, 2010

Friday, August 27, 2010

OpenCL™ Optimization Case Study: Simple Reductions

This study shows that even on the GPU a naive implementation works, but a clever scheme can improve the efficiency of the approach.

The article is well written, with illustrations showing each clever idea for using the full SIMD machine. It explains how to implement a parallel reduction operation on the GPU (a reduce operation with a given predicate => find a min, max...).

Final code :

    // Min-reduction: each work item scans one contiguous block of the
    // input serially and keeps the smallest value it has seen.
    __kernel
    void reduce(__global float* buffer,
                __const int block,
                __const int length,
                __global float* result) {
      int global_index = get_global_id(0) * block;
      float accumulator = INFINITY;
      int upper_bound = (get_global_id(0) + 1) * block;
      if (upper_bound > length) upper_bound = length;  // clamp the last block
      while (global_index < upper_bound) {
        float element = buffer[global_index];
        accumulator = (accumulator < element) ? accumulator : element;
        global_index++;
      }
      // One partial minimum is written per work group.
      result[get_group_id(0)] = accumulator;
    }

Source : AMD

| Associative Reduction Tree and SIMD Mapping |

| Commutative Reduction and SIMD Mapping |

| Two-stage Reduction |
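To make the data flow easier to follow, here is a CPU-side sketch of a blocked min-reduction in two passes: the first pass mirrors the kernel shown in this post (one serial scan per block), the second combines the partial results. It assumes one work item per work group and a final combine on the host; it emulates the idea in plain Python and is not the article's exact two-stage scheme. The function names are hypothetical.

```python
def reduce_block(buffer, block, length, group_id):
    """Serial scan of one block, following the kernel's logic."""
    global_index = group_id * block
    upper_bound = min((group_id + 1) * block, length)  # clamp the last block
    accumulator = float("inf")
    while global_index < upper_bound:
        element = buffer[global_index]
        accumulator = accumulator if accumulator < element else element
        global_index += 1
    return accumulator


def two_stage_min(buffer, block):
    """Stage 1: one partial minimum per block (parallel on a GPU).
    Stage 2: combine the partial minima (done here on the host)."""
    length = len(buffer)
    groups = (length + block - 1) // block  # ceiling division
    partial = [reduce_block(buffer, block, length, g) for g in range(groups)]
    return min(partial, default=float("inf"))


data = [5.0, 3.0, 8.0, -2.0, 7.0, 1.0, 9.0]
print(two_stage_min(data, block=3))
```

The same structure works for any associative operation (max, sum, ...) by changing the comparison and the identity value used to initialise the accumulator.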

Labels: OpenCL, Programming, Tech demo

Thursday, August 26, 2010

Natal/Kinect core information

Here are some images of the Natal core:

=> We can see the depth images and the segmentation (finding the player and the joints).

Here is a video that illustrates the whole process:

Monday, August 23, 2010

Smartphone Gamut !

Here is a study that compares the gamut coverage of our smartphone screens!

The prize goes to the HTC Legend!
